Recent advances in artificial intelligence (AI) have renewed hopes that these tools can support digital deliberation. Much of the discussion focuses on evaluating people’s reactions to AI-enabled deliberation once they encounter it. That is useful, but it misses a prior question:

Does merely signaling that deliberation will use AI affect people’s willingness to participate? Given widespread skepticism toward AI, announcing its use in deliberative formats may deter participation. Thus, even if AI can technically enhance deliberation, it may also weaken it by keeping people from taking part in the first place.

Together with Adrian Rauchfleisch, I examine this question in a new article just published in Government Information Quarterly.

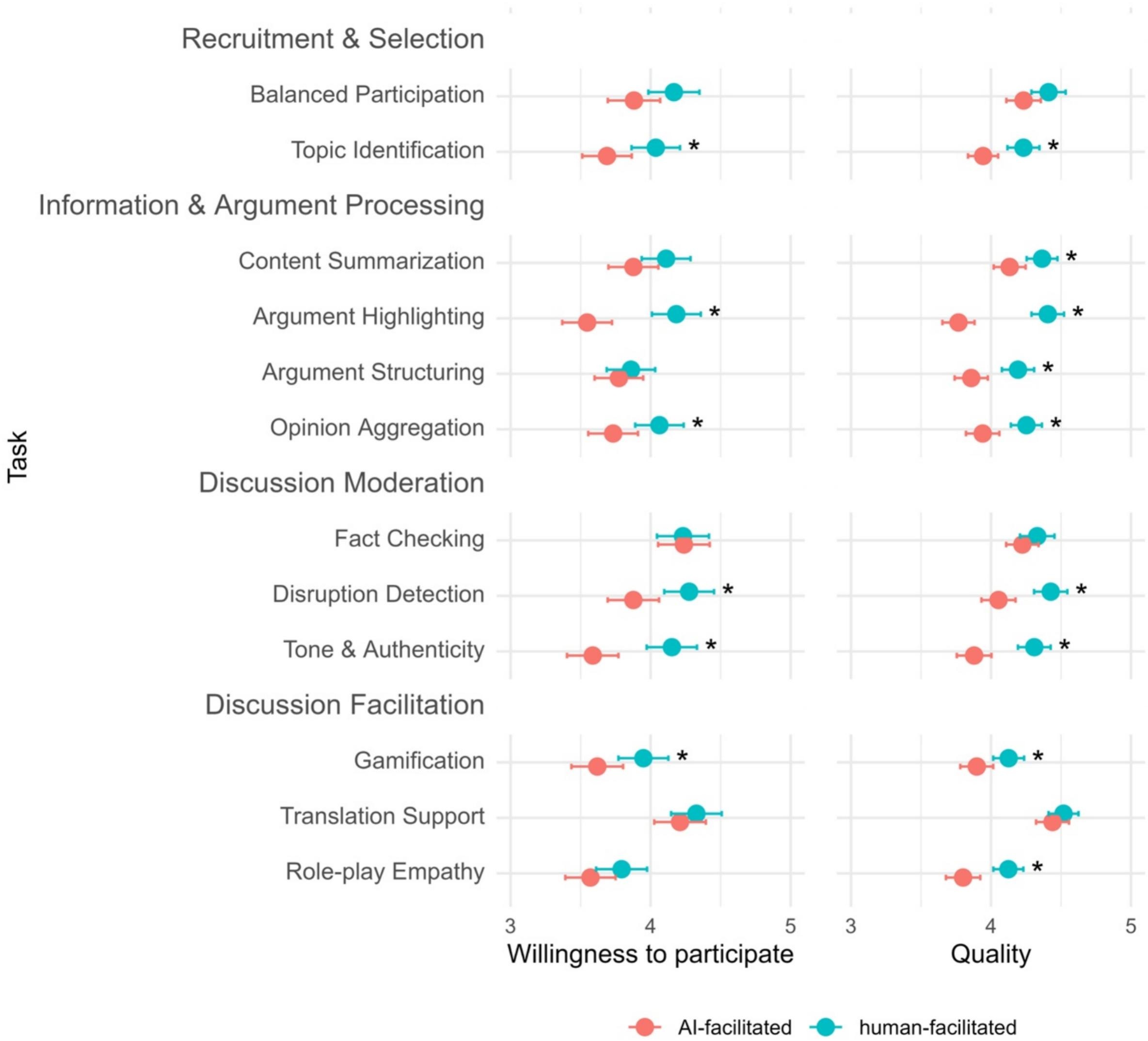

Using a preregistered survey experiment with a representative sample in Germany (n = 1,850), we test how people respond when told that specific deliberative tasks will be performed by AI versus by humans.

We find a clear AI penalty in deliberation: informing people that AI will be involved lowers both willingness to participate and expectations of deliberative quality.

This AI penalty is moderated by prior attitudes toward AI: people who are already skeptical of AI react more negatively. This points to the emergence of a new deliberative divide rooted in views about AI rather than in traditional factors such as demographics or education.

As democratic practices move online and increasingly leverage AI, understanding and addressing public perceptions and hesitancy will be critical.

Read the full article in Government Information Quarterly (doi: 10.1016/j.giq.2025.102079).

Abstract: Advances in Artificial Intelligence (AI) promise help for democratic deliberation, such as processing information, moderating discussion, and fact-checking. But public views of AI’s role remain underexplored. Given widespread skepticism, integrating AI into deliberative formats may lower trust and willingness to participate. We report a preregistered within-subjects survey experiment with a representative German sample (n = 1850) testing how information about AI-facilitated deliberation affects willingness to participate and expected quality. Respondents were randomly assigned to descriptions of identical deliberative tasks facilitated by either AI or humans, enabling causal identification of information effects. Results show a clear AI penalty: participants were less willing to engage in AI-facilitated deliberation and anticipated lower deliberative quality than for human-facilitated formats. The penalty shrank among respondents who perceived greater societal benefits of AI or tended to anthropomorphize it, but grew with higher assessments of AI risk. These findings indicate that AI-facilitated deliberation currently faces substantial public skepticism and may create a new “deliberative divide.” Unlike traditional participation gaps linked to education or demographics, this divide reflects attitudes toward AI. Efforts to realize AI’s affordances should directly address these perceptions to offset the penalty and avoid discouraging participation or exacerbating participatory inequalities.

Andreas Jungherr and Adrian Rauchfleisch. 2025. Artificial Intelligence in deliberation: The AI penalty and the emergence of a new deliberative divide. Government Information Quarterly 42(4): 102079. doi: 10.1016/j.giq.2025.102079